Component Visualizers for Unreal Engine Editor

Introduction

Have you ever created a custom scene component with some complex logic and no visible representation and wished you could visualize that logic in the editor without burdening your game? Actually, you can! This can be achieved with the help of visualizers in Unreal Engine.

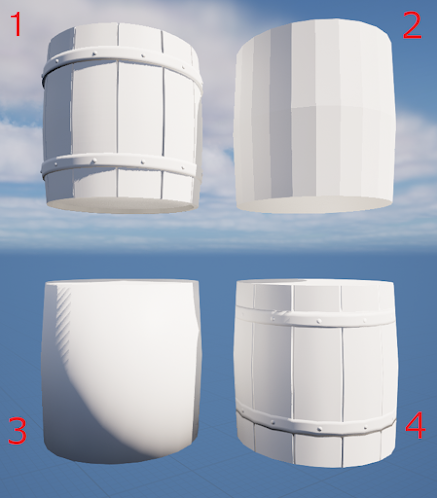

Take a look at the image above. See those yellow wire spheres with numbers? These are the custom visualizers for my custom component, used in an actor blueprint.

Custom component visualizers in action!

Instructions

Here is a simple tutorial to achieve this result:

- Because these visualizers are to be used only in the UE Editor, they have to be implemented in the Editor module of your project. If you don't have one yet, please follow these instructions to make one.

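- Next, make a subclass of the FComponentVisualizer class that will draw your custom visuals, and override its DrawVisualization and/or DrawVisualizationHUD functions. The visualizer also has to be registered for your component class in the editor module's startup code, typically with GUnrealEd->RegisterComponentVisualizer. This article by Matt gives superb instructions on implementing FComponentVisualizer subclasses.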

That's it! Easy, isn't it? It took me just an hour to make my first custom wire sphere visualizer, and a couple more hours to add text to it. Why so long, you ask?

Text visualizers

It was my first time working with an editor module and visualizers, and it took me some time to figure out how to draw text in the world.

The catch was that the text has to be drawn not in world space but on the HUD, in screen space, so the component's world location must first be projected to screen coordinates. Luckily, the FComponentVisualizer API provides everything necessary: DrawVisualizationHUD receives both the FSceneView and the FCanvas. This little code snippet did the job:

```cpp
bool FFireSpawnLocationVisualizer::ProjectWorldToScreen(const FSceneView* View, const FVector& Location, FVector2D& OutScreenPosition)
{
    bool bResult = FSceneView::ProjectWorldToScreen(
        FVector4(Location, 1),
        View->CameraConstrainedViewRect,
        View->ViewMatrices.GetViewProjectionMatrix(),
        OutScreenPosition
    );

    if (!bResult)
    {
        UE_LOG(LogTemp, Warning, TEXT("ProjectWorldToScreen failure."));
        return false;
    }

    return true;
}
```
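For readers curious about what such a projection does, here is a minimal, engine-free sketch of the usual world-to-screen math: transform the point to clip space, reject it if it is behind the camera, apply the perspective divide, then map the result onto the view rectangle. The `Vec4`, `Vec2`, `Rect`, and `Mat4` types and the `Transform` and `ProjectToScreen` names are hypothetical stand-ins rather than Unreal's own types, and the engine's actual implementation may differ in its details.

```cpp
#include <cassert>

// Hypothetical stand-ins for the engine's vector, rectangle, and matrix types.
struct Vec4 { double X, Y, Z, W; };
struct Vec2 { double X, Y; };
struct Rect { int MinX, MinY, MaxX, MaxY; };
struct Mat4 { double M[4][4]; };

// Row-vector times matrix (v * M).
Vec4 Transform(const Vec4& V, const Mat4& A)
{
    const double In[4] = { V.X, V.Y, V.Z, V.W };
    double Out[4] = { 0.0, 0.0, 0.0, 0.0 };
    for (int Col = 0; Col < 4; ++Col)
    {
        for (int Row = 0; Row < 4; ++Row)
        {
            Out[Col] += In[Row] * A.M[Row][Col];
        }
    }
    return { Out[0], Out[1], Out[2], Out[3] };
}

// Returns false when the point is behind the camera (non-positive clip-space W).
bool ProjectToScreen(const Vec4& World, const Rect& ViewRect, const Mat4& ViewProjection, Vec2& OutScreen)
{
    assert(ViewRect.MaxX > ViewRect.MinX && ViewRect.MaxY > ViewRect.MinY);

    const Vec4 Clip = Transform(World, ViewProjection);
    if (Clip.W <= 0.0)
    {
        return false;
    }

    // Perspective divide gives coordinates in [-1, 1]; remap to [0, 1].
    // Y is flipped because screen space grows downwards.
    const double U = 0.5 + 0.5 * (Clip.X / Clip.W);
    const double V = 0.5 - 0.5 * (Clip.Y / Clip.W);

    // Scale and offset into the pixel rectangle of the view.
    OutScreen.X = ViewRect.MinX + U * (ViewRect.MaxX - ViewRect.MinX);
    OutScreen.Y = ViewRect.MinY + V * (ViewRect.MaxY - ViewRect.MinY);
    return true;
}
```

The early return matters for visualizers: a component behind the camera has no meaningful screen position, so the caller should simply skip drawing its label.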

The next blocker was finding a font to draw the text with. None of my old tricks worked, so I had to learn a new one:

```cpp
UFont* Font = UEngine::GetLargeFont();
```

And here is the complete source code, if you have more questions:

```cpp
///////////////////////////////////////////
// FireSpawnLocationVisualizer.h:

#pragma once

#include "CoreMinimal.h"
#include "ComponentVisualizer.h"

class UFont;

class FIREFIGHTERWIZARDEDITOR_API FFireSpawnLocationVisualizer : public FComponentVisualizer
{
public:
    FFireSpawnLocationVisualizer();
    ~FFireSpawnLocationVisualizer();

    // Draws the wire sphere in world space.
    virtual void DrawVisualization(const UActorComponent* Component, const FSceneView* View, FPrimitiveDrawInterface* PDI) override;

    // Draws the spawn order number in screen space.
    virtual void DrawVisualizationHUD(const UActorComponent* Component, const FViewport* Viewport, const FSceneView* View, FCanvas* Canvas) override;

private:
    // Plain pointer: UPROPERTY() has no effect in a non-UObject class like this one.
    UFont* Font;

    static bool ProjectWorldToScreen(const FSceneView* View, const FVector& Location, FVector2D& OutScreenPosition);
};
```

```cpp
///////////////////////////////////////////
// FireSpawnLocationVisualizer.cpp:

#include "FireSpawnLocationVisualizer.h"
#include "../FireSpawnLocation.h"
#include "CanvasTypes.h"
#include "UnrealEdGlobals.h"
#include "Engine/Engine.h"   // UEngine::GetLargeFont
#include "Engine/Font.h"
#include "SceneManagement.h" // DrawWireSphere

FFireSpawnLocationVisualizer::FFireSpawnLocationVisualizer()
{
    Font = UEngine::GetLargeFont();
}

FFireSpawnLocationVisualizer::~FFireSpawnLocationVisualizer()
{
}

void FFireSpawnLocationVisualizer::DrawVisualization(const UActorComponent* Component, const FSceneView* View, FPrimitiveDrawInterface* PDI)
{
    const UFireSpawnLocation* MyComponent = Cast<UFireSpawnLocation>(Component);
    if (!MyComponent)
    {
        return;
    }

    const double Radius = 20.0;
    const int32 NumSides = 12;
    // The original passed -1, which wraps to 255 in a uint8; a named depth group states the intent.
    const uint8 DepthPriority = SDPG_Foreground;
    const float LineThickness = 1.0f;

    DrawWireSphere(
        PDI,
        MyComponent->GetComponentLocation(),
        FLinearColor::Yellow,
        Radius,
        NumSides,
        DepthPriority,
        LineThickness
    );
}

void FFireSpawnLocationVisualizer::DrawVisualizationHUD(const UActorComponent* Component, const FViewport* Viewport, const FSceneView* View, FCanvas* Canvas)
{
    const UFireSpawnLocation* MyComponent = Cast<UFireSpawnLocation>(Component);
    if (!MyComponent)
    {
        return;
    }

    // Text is drawn in screen space, so project the component's world location first.
    FVector2D ScreenPosition;
    if (!ProjectWorldToScreen(View, MyComponent->GetComponentLocation(), ScreenPosition))
    {
        return;
    }

    Canvas->DrawShadowedText(
        ScreenPosition.X,
        ScreenPosition.Y,
        FText::AsNumber(MyComponent->SpawnOrder),
        Font,
        FLinearColor::Yellow
    );
}

bool FFireSpawnLocationVisualizer::ProjectWorldToScreen(const FSceneView* View, const FVector& Location, FVector2D& OutScreenPosition)
{
    bool bResult = FSceneView::ProjectWorldToScreen(
        FVector4(Location, 1),
        View->CameraConstrainedViewRect,
        View->ViewMatrices.GetViewProjectionMatrix(),
        OutScreenPosition
    );

    if (!bResult)
    {
        UE_LOG(LogTemp, Warning, TEXT("ProjectWorldToScreen failure."));
        return false;
    }

    return true;
}
```

Conclusion

I definitely intend to use this technique extensively in the game project I'm working on. If you are interested in a more complex usage example and a more in-depth explanation, please also read this article by Quod Soler.

Happy GameDeving, everyone!